Questions about D4PG

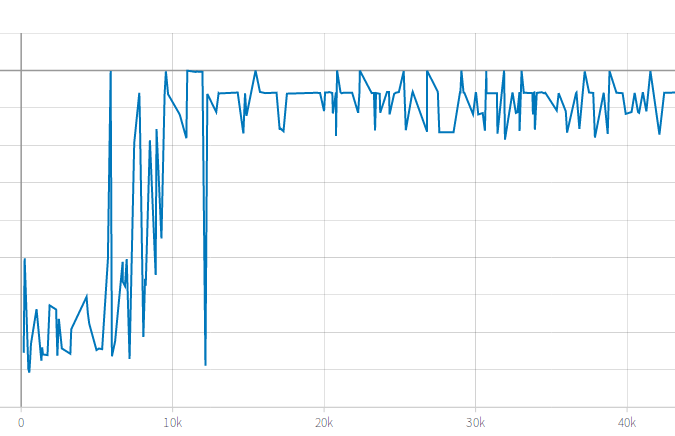

1. Why is the sample efficiency so low?

2. Do v_min = -20.0 and gamma = 0.99 really work together?
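One rough way to approach question 2 is to compare v_min with the worst discounted return the environment could produce. The sketch below assumes a constant per-step reward `r_min` over `horizon` steps; both names and the value -0.2 are hypothetical and not taken from the environment used in these notes.

```python
def discounted_return_bound(r_min, gamma, horizon=None):
    """Discounted sum of a constant per-step reward r_min.

    horizon=None gives the infinite-horizon limit r_min / (1 - gamma).
    """
    if horizon is None:
        return r_min / (1.0 - gamma)
    return r_min * (1.0 - gamma ** horizon) / (1.0 - gamma)


gamma = 0.99
v_min = -20.0

# Hypothetical per-step reward; the real reward range is not given in these notes.
r_min = -0.2
print(discounted_return_bound(r_min, gamma))       # infinite-horizon bound
print(discounted_return_bound(r_min, gamma, 200))  # bound for a 200-step episode
```

With gamma = 0.99 the effective horizon is about 1 / (1 - gamma) = 100 steps, so v_min = -20.0 covers the true returns only if the per-step reward stays above roughly -0.2. Returns below v_min are clipped to the lowest atom by the categorical projection, which may or may not matter in practice.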

Strengths of the D4PG results

Scores are high and stable.

1. Distributed

Multiple actors (3)

Prioritized experience replay
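For reference, proportional prioritized replay can be sketched as below. This is a toy, list-based version under stated assumptions, not the buffer used in the code these notes describe: the class name `TinyPrioritizedBuffer` and the defaults `alpha=0.6` and `beta=0.4` are illustrative only.

```python
import random


class TinyPrioritizedBuffer:
    """Toy proportional prioritized replay (list-based, O(n) sampling)."""

    def __init__(self, alpha=0.6):
        self.alpha = alpha  # how strongly priorities skew sampling
        self.items = []
        self.priorities = []

    def add(self, transition, priority=1.0):
        self.items.append(transition)
        self.priorities.append(priority)

    def sample(self, batch_size, beta=0.4):
        # P(i) is proportional to priority_i ** alpha
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        probs = [s / total for s in scaled]
        idxs = random.choices(range(len(self.items)), weights=probs, k=batch_size)
        # Importance-sampling weights correct the bias of non-uniform sampling;
        # here they are normalized by the largest weight in the batch.
        n = len(self.items)
        weights = [(n * probs[i]) ** (-beta) for i in idxs]
        max_w = max(weights)
        weights = [w / max_w for w in weights]
        return idxs, [self.items[i] for i in idxs], weights

    def update(self, idxs, td_errors, eps=1e-6):
        # New priority = |TD error| + eps, so no transition gets zero probability
        for i, err in zip(idxs, td_errors):
            self.priorities[i] = abs(err) + eps
```

With several actors writing into one buffer, the learner would call `sample`, scale each sample's loss by its weight, and then call `update` with the new errors.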

2. Training duration

Training episodes: 10000

SAVE_CKPT_STEP = 10000 # Save checkpoint every save_ckpt_step training steps

noise process

NOISE_SCALE = 0.3 # Scaling to apply to Gaussian noise

NOISE_DECAY = 0.9999 # Decay noise throughout training by scaling by noise_decay**training_step
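The two noise settings combine as described in the comment: the noise scale at a given step is `NOISE_SCALE * NOISE_DECAY ** training_step`. A minimal sketch follows, assuming the noise is added per action dimension and then clipped to the action bounds; the helper names `noise_std` and `noisy_action` are hypothetical, and the real code may also scale the noise by the action range.

```python
import random

NOISE_SCALE = 0.3
NOISE_DECAY = 0.9999


def noise_std(training_step):
    """Standard deviation of the exploration noise at a given training step."""
    return NOISE_SCALE * NOISE_DECAY ** training_step


def noisy_action(action, training_step, low, high):
    """Add decayed Gaussian noise to each action dimension, then clip to bounds."""
    std = noise_std(training_step)
    return [min(max(a + random.gauss(0.0, std), low), high) for a in action]


for step in (0, 1000, 10000):
    print(step, noise_std(step))
```

After 10000 steps the scale has dropped to about 0.11, roughly 37% of its initial value, so exploration is still far from switched off at that point.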

N_STEP_RETURNS = 5

DISCOUNT_RATE = 0.99
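With N_STEP_RETURNS = 5 and DISCOUNT_RATE = 0.99, each stored transition would carry the discounted sum of five rewards, and the critic target is bootstrapped with gamma ** 5. The following is a sketch of that arithmetic only; the function name `n_step_transition` is hypothetical and episode termination is ignored.

```python
def n_step_transition(rewards, gamma=0.99):
    """Collapse n consecutive rewards into one discounted reward.

    Returns (discounted_reward, bootstrap_discount), where bootstrap_discount
    is gamma ** n, the factor applied to the critic's target at step t + n.
    """
    discounted = 0.0
    for k, r in enumerate(rewards):
        discounted += (gamma ** k) * r
    return discounted, gamma ** len(rewards)


# Five consecutive rewards of 1.0 (made-up values for illustration)
reward_sum, bootstrap_discount = n_step_transition([1.0] * 5)
print(reward_sum, bootstrap_discount)
```

Here the bootstrap discount is 0.99 ** 5, about 0.951, instead of 0.99 for a one-step target.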

CRITIC_LEARNING_RATE = 0.0001

ACTOR_LEARNING_RATE = 0.0001

NUM_ATOMS = 51

STATE_DIMS = dummy_env.get_state_dims()

STATE_BOUND_LOW, STATE_BOUND_HIGH = dummy_env.get_state_bounds()

ACTION_DIMS = dummy_env.get_action_dims()

ACTION_BOUND_LOW, ACTION_BOUND_HIGH = dummy_env.get_action_bounds()

V_MIN = dummy_env.v_min

V_MAX = dummy_env.v_max

del dummy_env
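NUM_ATOMS, V_MIN and V_MAX together define the fixed support of the critic's categorical value distribution. A small sketch of how the atoms are spaced is shown below; V_MAX = 0.0 is an assumption made for illustration, since the notes only mention v_min = -20.0, and the helper names are hypothetical.

```python
def make_support(v_min, v_max, num_atoms):
    """Evenly spaced atoms z_i between v_min and v_max, plus the spacing."""
    delta_z = (v_max - v_min) / (num_atoms - 1)
    atoms = [v_min + i * delta_z for i in range(num_atoms)]
    return atoms, delta_z


def expected_value(probs, atoms):
    """Scalar Q-value implied by a categorical distribution over the atoms."""
    return sum(p * z for p, z in zip(probs, atoms))


# V_MAX = 0.0 is assumed here; the real value comes from dummy_env.v_max.
atoms, delta_z = make_support(v_min=-20.0, v_max=0.0, num_atoms=51)
uniform = [1.0 / len(atoms)] * len(atoms)
print(delta_z, expected_value(uniform, atoms))
```

Under this assumption the 51 atoms sit 0.4 apart, which bounds how finely the critic can resolve differences in return.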

3. Batch normalization

USE_BATCH_NORM = False
